Hash Tables

This note is part of my learning notes on Data Structures & Algorithms.

Introduction

Hash tables are a type of data structure that allows fast INSERT/DELETE/SEARCH operations. Each operation will take constant time .

- Insert(k): Insert key into the hash table.

- Lookup(k): Check if key is present in the table.

- Delete(k): Delete the key from the table.

Let be the universe of all keys, the size of this universe is , where is really big. In a hash table of size , each key is mapped to one of “buckets” by a hash function.

A hash function is a mathematical function that takes an input and returns an output of fixed-size string of bytes, which is typically a hash value (maps elements of to buckets ). This value is often represented as a hexadecimal number.

Examples of hash function:

- division

- multiplication

- universal hashing

- dynamic perfect hashing

- static perfect hashing

This technique called hashing determines an index or location for the storage of an item in a data structure.

Random Hash Functions

We want a hash table to not have too many buckets (to save space), and we want the items to be spread-out in the buckets (enable fast operations). Hence, we incorporate randomness in hashing. There are two ways to do so:

- Assume that the set of keys stored in the hash table is random, or

- Assume that the hash function is random.

Let there be number of keys, be the size of the hash bucket that maps to. The expected cost of performing any of the operations INSERT/DELETE/SEARCH with a random hash function is:

When is random,

Algorithm:

- Choose any items , and any sequence of

INSERT/DELETE/SEARCHoperations on these items. - Choose a random hash function .

- Hash it out.

Collisions

Suppose there’s an array of some size and a hash function . A collision is when for two different keys and .

Claim: for any hash function , if , there exists a set of elements that all hash to the same location.

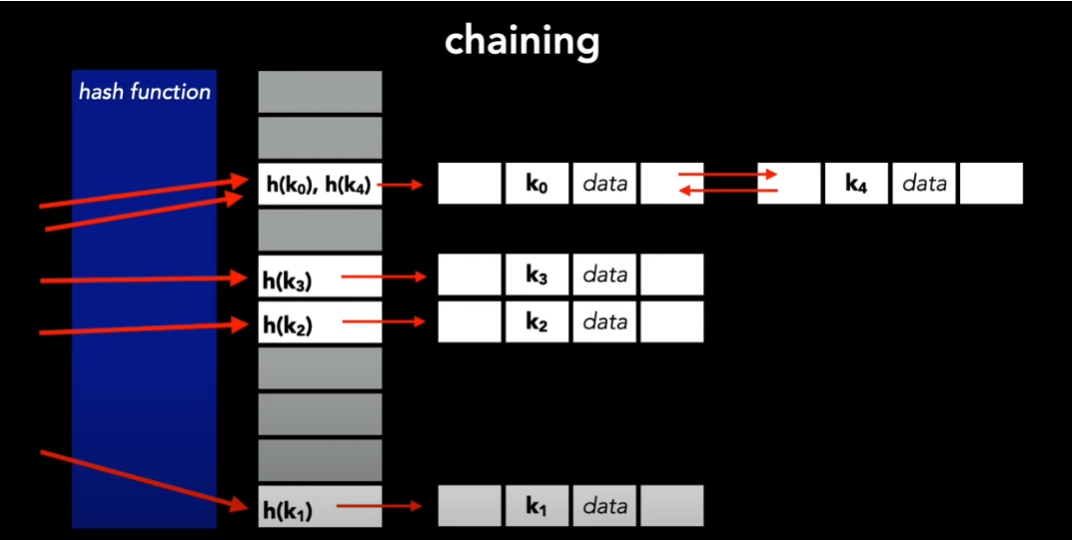

We can handle collision with a method called separate chaining, by having each entry in be a linked list. To insert an element, we just put it at the top of the list. If is a good function, then we hope that the lists will be small.

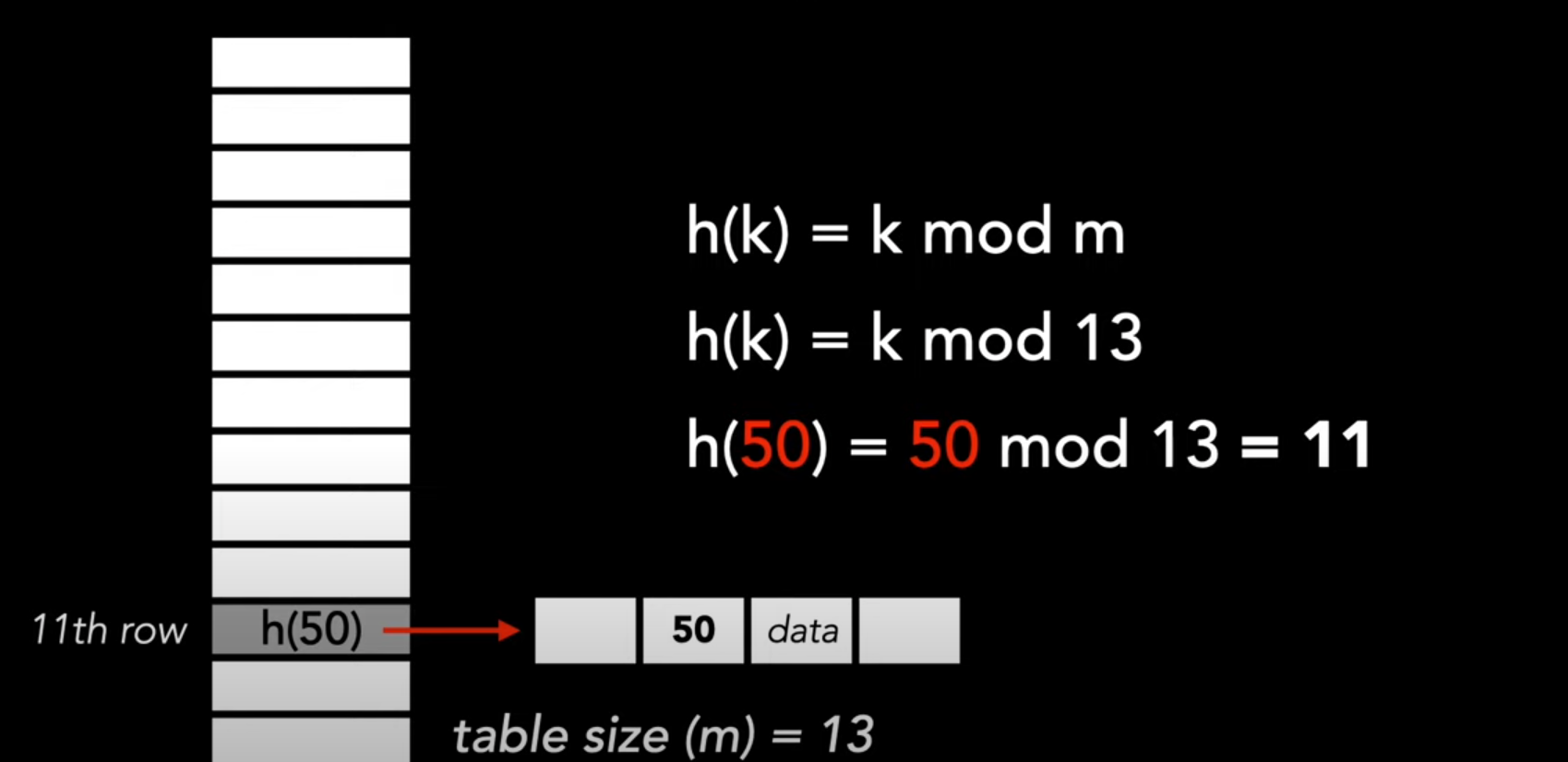

Hashing By Division

Suppose there is a hash table of size , using division, the hash function is:

Universal Hashing

A uniformly random hash function can lead to balanced buckets, decreasing the number of collisions, and ensuring all INSERT/DELETE/SEARCH operations take constant time. But it’s not a good idea to use this method because we will have to store them and there’s of them. Writing down a random one of them takes bits, which is .

The solution is to pick from a smaller set of hash functions. This chosen subset collection of hash functions is called a hash family . When is chosen uniformly at random from , it is said to be universal if: for each pair of different keys and , the the probability that they hash to the same value (collision) is at most .

Formally: is universal if:

For a small universal hash family of size , we need only bits to store it.

Properties of Universal Hashing

- Low Collision Probability: Universal hashing ensures that the probability of any two distinct keys colliding is low, specifically at most .

- Uniform Distribution: When a hash function is chosen randomly from a universal family, the hash values are uniformly distributed over the range, reducing the chances of clustering.

- Independence: The choice of hash function is independent of the keys, making it less likely for an adversary to predict collisions.

- Efficiency: Universal hash functions can be computed efficiently, typically in constant time .

Theorem

For arbitrary distinct keys, and for random hash function chosen from universal hash family

Matrix method

The matrix method is a way to construct a universal hash family.

Perfect Hashing

Perfect hashing is a type of hashing that guarantees no collisions for a given set of keys, providing optimal performance with lookup time.

We say a hash function is perfect for a set if it maps all elements of to distinct values

such that

Types of Perfect Hashing

- Static Perfect Hashing: A perfect hash function where a set of keys is fixed, and the function ensures no collisions among these keys.

- Dynamic Perfect Hashing: A perfect hash function that can handle a changing set of keys without collisions.

Properties of Perfect Hashing

- No collisions: by definition, a perfect hash function guarantees no collisions among the keys in the set

- Efficiency: optimal performance with lookup time.

Example: Balls And Bins Model

The balls and bins model is a probabilistic model used to analyze random allocation or distribution of objects. In this model, a set of balls represent distinct objects, and a set of bins represent distinct containers or possibilities.

I. No Collision (Birthday Paradox)

Suppose we throw balls randomly into bins. (Here the balls represents the keys to be hashed and the bins represent the slots in the hash table)

Probability that no two balls collide:

This shows us how large needs to be to have no collisions.

Max Load

Suppose , which means we throw balls randomly into bins. What is the maximum number of balls in any bin?

Example: Bloom Filter

A Bloom filter is a space-efficient, probabilistic data structure that is based on hashing. It is typically used to add a hash of the elements to a set to determine whether that element belongs to a set. Example: checking the availability of a username.

A Bloom filter is similar to a hash table, where it will use a hash function to map a key to a bucket. However, it will not store that key directly in that bucket, instead it will hash it into multiple hash functions and simply mark the bucket as filled. Hence some keys might map to the same filled bucket, causing false positives.

False positives occur when an element is not present in the original set, but the Bloom filter mistakenly indicates that it is. The probability of a false positive depends on the number of elements inserted into the filter and the capacity of the filter

Steps to using a Bloom filter:

- Get input

- Calculate hash value

- Mod the hash

- Insert the hash

- Lookup the value

Conclusion

A hash table can be designed such that it

- has hash function that maximizes randomness and produce least amount of collisions

- supports

INSERT/DELETE/SEARCHoperations in expected time - requires bits of space.

- buckets

- items with bits per item

- to store the hash function

U: universe

u: number of possible keys in universe

n: number of actual keys to store in hash table

k: key

x,y: a pair of distinct keys

m: size of hash table / number of slots in table / number of buckets

References

- Stanford CS161: Hashing

- CMU 15-451: Universal and Perfect Hashing

- [Brilliant: Bloom Filter](https://brilliant.org/wiki Bloom-filter/)

- [Geeksforgeeks: Bloom Filter](https://www.geeksforgeeks.org Bloom-filters-introduction-and-python-implementation/)

- Duke University CPS102: Probability in hashing