Generative AI & LLMs

This page contains my notes on learning Generative Artificial Intelligence & Large Language Models.

Learning Materials

Prerequisites: Basic understanding of machine learning concepts such as classification, regression, neural networks, loss functions, and hyperparameters.

Introduction

Generative AI is a subset of traditional machine learning. The machine learning models underlying generative AI have learned to create content that mimics human ability by finding statistical patterns in massive datasets of content originally generated by humans.

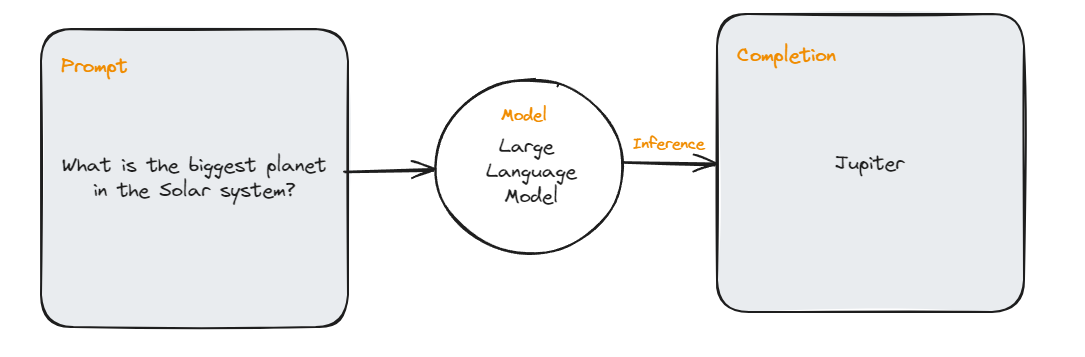

Traditional machine learning models require users to write computer code with formalized syntax to interact with libraries and APIs. In contrast, Large Language Models (LLMs) can take natural-language instructions and perform tasks much as a human would. The text that you pass to an LLM is known as a prompt, the act of using the model to generate text is known as inference, and the output text is known as the completion.
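
To make the terms prompt, inference, and completion concrete, here is a minimal sketch in Python. It is not a real LLM: it assumes a toy bigram word counter trained on a three-sentence corpus, and the names `CORPUS`, `train_bigram_model`, and `generate` are hypothetical ones made up for this illustration. It only shows the idea of learning statistical patterns from text and then extending a prompt with the most likely next word.

```python
from collections import Counter, defaultdict

# Toy stand-in for the massive human-written datasets a real LLM learns from.
CORPUS = (
    "the cat sat on the mat . "
    "a dog sat on the mat . "
    "a bird sat on the mat ."
)


def train_bigram_model(text):
    """Count which word follows which word (the 'statistical patterns')."""
    follows = defaultdict(Counter)
    words = text.split()
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def generate(model, prompt, max_new_words=5):
    """Inference: greedily append the most frequent next word to the prompt."""
    words = prompt.split()
    completion = []
    for _ in range(max_new_words):
        candidates = model.get(words[-1])
        if not candidates:
            break  # the last word never appeared in the corpus
        next_word = candidates.most_common(1)[0][0]
        words.append(next_word)
        completion.append(next_word)
        if next_word == ".":
            break  # end of sentence
    return " ".join(completion)


model = train_bigram_model(CORPUS)
prompt = "a cat sat"                    # the prompt
completion = generate(model, prompt)    # inference produces the completion
print(f"{prompt} -> {completion}")
```

A real LLM replaces the word counts with a neural network that has billions of parameters and conditions on the whole prompt rather than only the last word, but the prompt-in, completion-out loop has the same shape.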

Some examples of Large Language Models are:

- GPT

- BERT

- LLaMA

- FLAN-T5

- BLOOM

- PaLM

- Jurassic

Large Language Models are used in a wide variety of situations, including:

- Language translation (natural <-> natural / code <-> natural)

- Information retrieval (ask the model to retrieve specific data from a large dataset)

- Text classification

- Question answering

- Sentiment analysis
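
Because an LLM is driven by natural-language instructions, each use case in the list above can be expressed as a different prompt. The sketch below is an illustration only: the template wording, the `PROMPT_TEMPLATES` dictionary, and the `build_prompt` helper are hypothetical and not taken from any library, and the resulting string would still have to be sent to an actual model for inference.

```python
# Hypothetical prompt templates, one per use case from the list above.
PROMPT_TEMPLATES = {
    "translation": "Translate the following text to French:\n{text}",
    "information_retrieval": "List every date mentioned in the following text:\n{text}",
    "text_classification": "Classify the following text as sports, politics, or tech:\n{text}",
    "question_answering": "Answer the following question:\n{text}",
    "sentiment_analysis": "Is the sentiment of the following review positive or negative?\n{text}",
}


def build_prompt(task, text):
    """Fill the template for the given task with the user's text."""
    return PROMPT_TEMPLATES[task].format(text=text)


prompt = build_prompt("sentiment_analysis", "I loved this movie.")
print(prompt)
```

Only the prompt changes between tasks here; the assumption is that the same model handles all of them.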